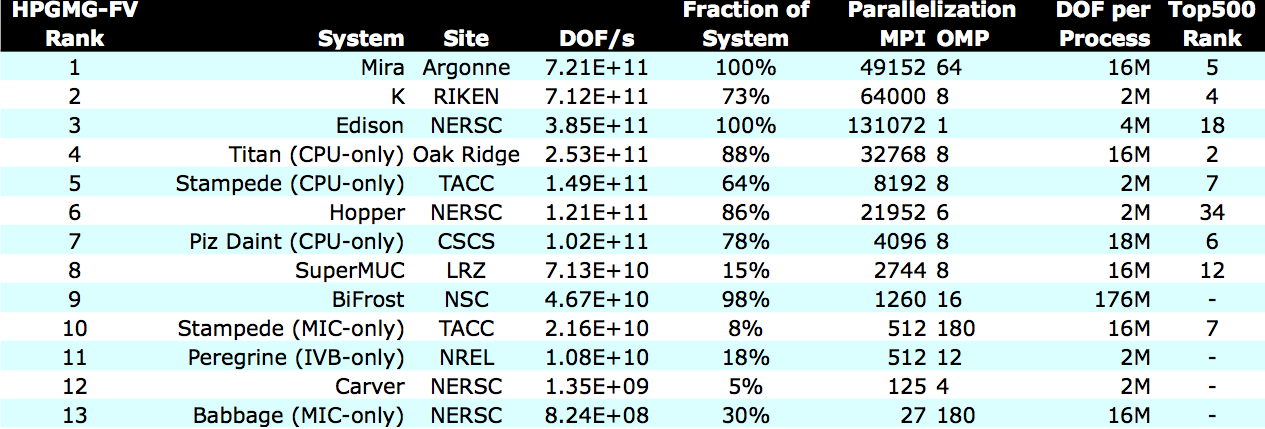

We recently received our first external HPGMG-FV submission, from BiFrost at NSC. See the HPGMG-FV Submission Guidelines if you would like to add your machine to the rankings. Here are the current rankings:

Note that some machines may be significantly under-represented due to having run on a small fraction of the machine.

Performance Versatility

Scalability depends crucially on problem size, and thus on time to solution. Applications such as climate, subsurface flow, statistically converged large eddy simulation, and molecular dynamics require many time steps and, due to external requirements on time to solution, are typically run near their strong scaling limit. Such applications run best on machines that deliver good performance at short solve times. Other applications, such as certain types of data analysis, are constrained by total available memory.

HPGMG-FV

This scatter plot shows performance versus solve time for the HPGMG-FV configurations run, with the area of each point indicating the problem size per thread. Hover over points for details about the machine and configuration.

This plot has only one data point per machine, typically at neither the strong scaling limit nor the memory-capacity limit, and thus provides information only about that single configuration, not about the performance spectrum of each machine.
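To make the plotted quantities concrete, here is a minimal sketch of how one point in such a scatter plot could be derived from a single run. It assumes performance is reported as degrees of freedom solved per second (in GDOF/s) and that point area tracks degrees of freedom per thread. The `Run` class, its field names, and the numbers are hypothetical and do not correspond to any submitted result.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One hypothetical benchmark configuration (all values illustrative)."""
    machine: str
    dofs: int          # total degrees of freedom on the finest grid
    threads: int       # total threads used for the run
    solve_time: float  # seconds per solve

    @property
    def gdof_per_s(self) -> float:
        # Assumed metric: degrees of freedom solved per second, in units of 1e9.
        return self.dofs / self.solve_time / 1e9

    @property
    def dofs_per_thread(self) -> float:
        # Assumed marker-size quantity: problem size per thread.
        return self.dofs / self.threads


# Made-up configuration, not a real submission.
run = Run("example-machine", dofs=2**30, threads=4096, solve_time=0.5)
print(f"{run.machine}: {run.gdof_per_s:.3f} GDOF/s, "
      f"{run.dofs_per_thread:.0f} DOF/thread, {run.solve_time} s solve")
```

Sweeping `dofs` for a fixed machine would trace out the performance spectrum that a single data point cannot show.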

HPGMG-FE

We have broader performance spectrum data available for HPGMG-FE, though on fewer machines; here we show results for Edison, SuperMUC, and Titan (CPU-only). The cluster of samples for each problem size represents performance variability. Hover over points for more details about the sample.

Note that GDOF/s is not directly comparable between the FV and FE implementations, since the latter is higher order and uses general mapped grids, which increases the data requirements for coordinates and leads to much higher arithmetic intensity. The configurations run on Edison and SuperMUC used a power-of-2 number of cores per node, but HPGMG-FE does not require this and has sufficiently high arithmetic intensity to benefit from using all cores.

Please join the discussion on the hpgmg-forum mailing list.